Problem Statement:

Provide compute solutions using a managed Hadoop big data service and an open-source workflow management platform, together with a transient cluster implementation, to reduce dependence on persistent Hadoop clusters, cut costs, and improve overall workflows.

The solution uses the AWS managed service EMR as the big data Hadoop platform and Apache Airflow as the workflow manager. Airflow launches transient clusters by calling the Jenkins API, which triggers Terraform modules that communicate with AWS infrastructure services.

Services Used:

The implementation is made up of the following services:

- Apache Airflow: Airflow is an open-source platform to programmatically author, schedule, and monitor workflows as Directed Acyclic Graphs (DAGs) of tasks. In this implementation, the Airflow scheduler executes tasks such as triggering the Jenkins EMR creation job, submitting jobs to EMR from the DAGs, and terminating clusters after the jobs complete.

- AWS EMR: Amazon EMR is a managed cluster platform that simplifies running big data frameworks, such as Apache Hadoop, on AWS to process and analyse vast amounts of data. Additionally, you can use Amazon EMR to transform and move large amounts of data into and out of other AWS data stores and databases, such as Amazon S3.

- AWS S3: Amazon S3 (Simple Storage Service) is storage for the Internet. You can use Amazon S3 to store and retrieve any amount of data at any time, from anywhere on the web.

- Jenkins: Jenkins is a free and open-source automation server that automates the parts of software development related to building, testing, and deploying, facilitating continuous integration and continuous delivery (CI/CD).

- Terraform: Terraform is an open-source infrastructure-as-code software tool created by HashiCorp. Users define and provision data center infrastructure using a declarative configuration language known as HashiCorp Configuration Language (HCL).

- Ansible: Ansible is an open-source software provisioning, configuration management, and application-deployment tool enabling infrastructure as code. It runs on many Unix-like systems and can configure both Unix-like systems and Microsoft Windows.

- Git: Git is software for tracking changes in any set of files, usually used for coordinating work among programmers collaboratively developing source code during software development.

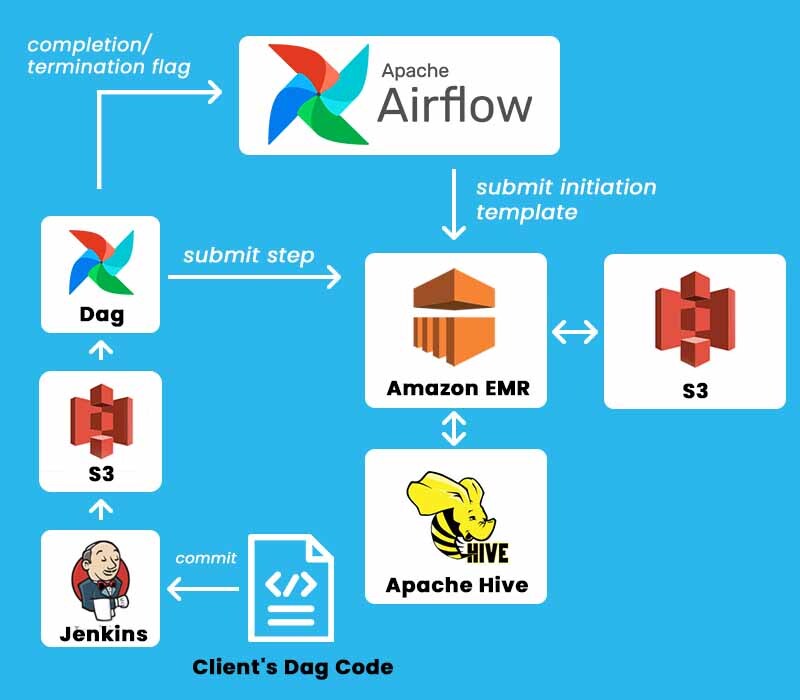

Workflow Diagram:

Workflow Orchestration:

The following use case demonstrates how a combination of DevOps technologies can orchestrate end-to-end management of big data workflows, with Amazon EMR as the cluster management service:

Jenkins Trigger for Infrastructure Creation:

The implementation triggers the Jenkins API to initiate AWS EMR Hadoop cluster creation through a Terraform module. When the developer's Airflow DAG code calls the API, a Jenkins job starts and pulls the latest Terraform module from a Git repository branch dedicated to the specific project; this module contains the infrastructure provisioning information for creating an EMR cluster of the required size. After triggering the Jenkins job, the Airflow DAG waits for the job to report successful cluster creation before proceeding further.
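A minimal sketch of how the Airflow side of this step could call Jenkins, assuming a parameterized Jenkins job authenticated with a user API token. The job name, its parameters (`PROJECT`, `CLUSTER_SIZE`) and all function names are hypothetical; the `buildWithParameters` endpoint, the queue item's `executable` field and the build's `building`/`result` fields come from the Jenkins remote access API. HTTP calls go through an injectable `fetch` function so the waiting logic can be exercised without a live server.

```python
import base64
import json
import time
import urllib.parse
import urllib.request
from typing import Callable, Optional, Tuple

# fetch(method, url) -> (HTTP status, lower-cased headers, decoded JSON body or None)
Fetch = Callable[[str, str], Tuple[int, dict, Optional[dict]]]


def make_fetch(user: str, api_token: str) -> Fetch:
    """Return a fetch function that calls Jenkins with HTTP basic auth (user + API token)."""
    auth = base64.b64encode(f"{user}:{api_token}".encode()).decode()

    def fetch(method: str, url: str) -> Tuple[int, dict, Optional[dict]]:
        req = urllib.request.Request(url, method=method,
                                     headers={"Authorization": f"Basic {auth}"})
        with urllib.request.urlopen(req) as resp:
            raw = resp.read()
            headers = {k.lower(): v for k, v in resp.headers.items()}
            return resp.status, headers, (json.loads(raw) if raw else None)

    return fetch


def trigger_url(base: str, job: str, params: dict) -> str:
    """URL that queues a parameterized build of `job`."""
    query = urllib.parse.urlencode(params)
    return f"{base.rstrip('/')}/job/{urllib.parse.quote(job)}/buildWithParameters?{query}"


def build_outcome(build: dict) -> Optional[str]:
    """None while the build is still running, otherwise Jenkins' result (e.g. 'SUCCESS')."""
    if build.get("building", True):
        return None
    return build.get("result")


def trigger_and_wait(fetch: Fetch, base: str, job: str, params: dict,
                     poll_seconds: float = 30.0, timeout: float = 3600.0) -> str:
    """Queue the job, wait for it to start and finish, and return its result."""
    status, headers, _ = fetch("POST", trigger_url(base, job, params))
    if status != 201:
        raise RuntimeError(f"Jenkins did not queue the build (HTTP {status})")
    # Jenkins answers with the queue item's URL in the Location header.
    queue_url = headers["location"].rstrip("/") + "/api/json"
    build_url = None
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if build_url is None:
            _, _, item = fetch("GET", queue_url)
            if item.get("cancelled"):
                raise RuntimeError(f"Jenkins build of {job!r} was cancelled in the queue")
            # The queue item gains an 'executable' entry once the build has started.
            executable = item.get("executable")
            if executable:
                build_url = executable["url"].rstrip("/") + "/api/json"
                continue
        else:
            _, _, build = fetch("GET", build_url)
            outcome = build_outcome(build)
            if outcome is not None:
                return outcome
        time.sleep(poll_seconds)
    raise TimeoutError(f"Jenkins job {job!r} did not finish within {timeout} s")
```

In a DAG, a Python task could call `trigger_and_wait(make_fetch(user, token), jenkins_url, "emr-create", {...})` and fail unless the result is `"SUCCESS"`; that failure is what keeps the downstream job-submission tasks from running against a cluster that does not exist.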

Terraform Module:

The Terraform module contains all the information needed to set up the infrastructure in a specific AWS VPC and subnet, along with specific, mutually compatible versions of EMR applications such as Hadoop, Spark, Zeppelin, JupyterHub, and Livy. The module also defines the bootstrapping process, which lets the user apply use-case-specific changes to the EMR cluster while it is being created.
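Terraform automatically loads a file named `terraform.tfvars.json` from its working directory, so one way for the Jenkins job to hand project-specific settings to the module is to render that file before running Terraform. The sketch below assumes hypothetical module variables (`emr_release_label`, `applications`, `core_instance_count`, `bootstrap_actions`, and so on) and illustrative size profiles; the real module's variable names, instance types and release label are not given here. Pinning a single EMR release label is what keeps the application versions compatible, since each EMR release ships a fixed set of application versions.

```python
import json
from pathlib import Path
from typing import Dict, List

# Hypothetical size profiles; instance types and counts are illustrative only.
SIZE_PROFILES: Dict[str, dict] = {
    "small": {"master_instance_type": "m5.xlarge", "core_instance_type": "m5.xlarge",
              "core_instance_count": 2},
    "medium": {"master_instance_type": "m5.2xlarge", "core_instance_type": "r5.2xlarge",
               "core_instance_count": 4},
    "large": {"master_instance_type": "m5.4xlarge", "core_instance_type": "r5.4xlarge",
              "core_instance_count": 10},
}

# Application names as EMR expects them; their versions are fixed by the release label.
APPLICATIONS = ["Hadoop", "Spark", "Zeppelin", "JupyterHub", "Livy"]


def render_tfvars(project: str, size: str, release_label: str, subnet_id: str,
                  bootstrap_scripts: List[str]) -> dict:
    """Build the variable map for one transient cluster (variable names are hypothetical)."""
    if size not in SIZE_PROFILES:
        raise ValueError(f"unknown cluster size {size!r}; expected one of {sorted(SIZE_PROFILES)}")
    return {
        "cluster_name": f"{project}-transient-{size}",
        "emr_release_label": release_label,
        "applications": list(APPLICATIONS),
        "subnet_id": subnet_id,
        **SIZE_PROFILES[size],
        # Each bootstrap action is a script in S3 that EMR runs on every node
        # while the cluster is being created.
        "bootstrap_actions": [
            {"name": uri.rsplit("/", 1)[-1], "path": uri, "args": []}
            for uri in bootstrap_scripts
        ],
    }


def write_tfvars(workdir: str, variables: dict) -> Path:
    """Write terraform.tfvars.json, which Terraform loads automatically from workdir."""
    path = Path(workdir) / "terraform.tfvars.json"
    path.write_text(json.dumps(variables, indent=2))
    return path
```

After writing the file, the Jenkins job would run `terraform init` followed by `terraform apply -input=false -auto-approve` in the same directory. Keeping sizes as named profiles means a DAG only has to pass a short label such as `small` rather than individual instance settings.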

Monitoring Implementation & DNS mapping:

After EMR has been created and the Hadoop services are set up on the provisioned infrastructure, the bootstrap utility gives the user the option to trigger monitoring solutions that publish metrics and utilisation information to Prometheus. In this use case, monitoring is implemented with an Ansible module stored in an S3 bucket, which is pulled onto and run on every cluster node. Finally, the Terraform module attaches a specific DNS record from the AWS Route53 service to the EMR master node, where the developer's code submits workflow-related jobs.
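A sketch of the monitoring bootstrap step, assuming the AWS CLI and Ansible are already installed on each node and that a Python 3 interpreter runs the script. The bucket, key and playbook name are hypothetical. The script copies the playbook from S3 and applies it to the local node only, so each node configures its own monitoring agent (for example, a Prometheus exporter that the Prometheus server then scrapes).

```python
import subprocess
from typing import List

# Hypothetical locations; replace with the project's bucket and playbook.
PLAYBOOK_S3_URI = "s3://example-bootstrap-bucket/ansible/monitoring.yml"
LOCAL_PLAYBOOK = "/tmp/monitoring.yml"


def bootstrap_commands(playbook_uri: str, local_path: str) -> List[List[str]]:
    """Commands that fetch the playbook and apply it to this node only."""
    return [
        # Download the playbook kept in S3.
        ["aws", "s3", "cp", playbook_uri, local_path],
        # 'localhost,' is an inline inventory (the trailing comma marks it as a host list);
        # '-c local' runs the tasks directly on this host instead of over SSH.
        ["ansible-playbook", "-i", "localhost,", "-c", "local", local_path],
    ]


def main(dry_run: bool = False) -> int:
    for cmd in bootstrap_commands(PLAYBOOK_S3_URI, LOCAL_PLAYBOOK):
        print("+", " ".join(cmd), flush=True)
        if not dry_run:
            # check=True raises on a non-zero exit, so the script (and the
            # bootstrap action running it) fails instead of silently continuing.
            subprocess.run(cmd, check=True)
    return 0
```

Used as an EMR bootstrap action, the script file would end with a call to `main()`; calling `main(dry_run=True)` only prints the commands, which is a cheap way to check the S3 path and playbook name first.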

Cluster Termination:

Once Airflow receives a success status from the Jenkins API, it continues the workflow by submitting jobs to the specified Livy DNS address. After the overall workflow completes successfully, Airflow's final task triggers the EMR termination Jenkins job; on failure, it sends notification emails to the owning teams.
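Livy exposes a REST API (port 8998 by default) for batch jobs: `POST /batches` with a JSON body naming the application `file`, then `GET /batches/{id}/state` until the batch reaches a terminal state. The sketch below submits one batch through the cluster's DNS name and then applies the final step described above: trigger termination on success, notify the owners on failure. The host name, S3 paths and the `terminate`/`notify` callables (which would wrap the Jenkins termination job and the mail step) are hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable, List, Optional

LIVY_PORT = 8998  # Livy's default port
FAILED_STATES = {"dead", "killed", "error"}

# http(method, url, json_payload_or_None) -> decoded JSON response
HttpCall = Callable[[str, str, Optional[dict]], dict]


def livy_http(method: str, url: str, payload: Optional[dict] = None) -> dict:
    """Send a JSON request to Livy and decode the JSON response."""
    data = json.dumps(payload).encode() if payload is not None else None
    req = urllib.request.Request(url, data=data, method=method,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def run_livy_batch(http: HttpCall, host: str, file: str, args: List[str],
                   class_name: Optional[str] = None,
                   poll_seconds: float = 30.0, timeout: float = 6 * 3600.0) -> str:
    """Submit one batch through the cluster's DNS name and return its final state."""
    base = f"http://{host}:{LIVY_PORT}/batches"
    body: dict = {"file": file, "args": args}
    if class_name:  # main class for JVM jobs; omitted for PySpark scripts
        body["className"] = class_name
    batch_id = http("POST", base, body)["id"]
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        state = http("GET", f"{base}/{batch_id}/state", None)["state"]
        if state == "success" or state in FAILED_STATES:
            return state
        time.sleep(poll_seconds)
    raise TimeoutError(f"Livy batch {batch_id} still running after {timeout} s")


def finish_workflow(state: str, terminate: Callable[[], None],
                    notify: Callable[[str], None]) -> None:
    """Final task: terminate the cluster on success, alert the owner team on failure."""
    if state == "success":
        terminate()  # e.g. trigger the Jenkins EMR termination job
    else:
        notify(f"Livy batch finished in state {state!r}")  # e.g. send the failure email
```

Because the workflow described above terminates the cluster only after success, this sketch leaves the cluster running when a job fails. If failed clusters should be removed too, the Airflow termination task could use `trigger_rule="all_done"` so it runs whatever the upstream outcome.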

Achievements:

- Automated the complete end-to-end Big Data Hadoop transient cluster workflow using the AWS managed service EMR.

- Saved around $10K per month by moving workflows to end-to-end transient clusters, removing workload from, and dependence on, persistent clusters.

- Reduced implementation time: daily cluster setup and termination are now handled without any human intervention.

- Fully adopted newer technologies and versions, phasing out legacy infrastructure.

- Additionally, reduced workflow job execution times by around 15-20%.